from rabbitmq to shared models: a 2-day debugging journey in a nutshell.

i was building a code execution platform, aka a judge system (a side project i haven't finished yet), on what seemed like a clean, well-put-together microservices setup: clean boundaries, loose coupling, independent services. all the buzzwords were checked off. then rabbitmq decided to teach me a lesson about the gap between theory and practice.

i needed rabbitmq for async communication between my services.

this is the story of how a simple message deserialization¹ issue led me to question some "sacred" microservices principles and embrace a solution that would make purists cringe. spoiler alert: it worked.

the problem: when "best practices" meet reality

here's the setup (basically): i have a submissionService that processes code submissions and needs to send execution requests to an executionService via rabbitmq. classic producer-consumer pattern, nothing fancy.
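the pattern itself is tiny. here's a minimal plain-java sketch of it, assuming an in-memory `BlockingQueue` as a stand-in for the rabbitmq queue and a hypothetical `Message` record (headers plus a json body). there's no broker and no spring here, so it only models the shape of the exchange, not rabbitmq's delivery guarantees.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// hypothetical message shape: metadata headers plus a json body
record Message(Map<String, String> headers, String body) {}

class QueueDemo {
    // in-memory stand-in for the rabbitmq queue between the two services
    static final BlockingQueue<Message> QUEUE = new LinkedBlockingQueue<>();

    // producer (submission side): enqueue the request and move on without waiting for a result
    static void produce(String jsonBody) {
        // headers stay empty here; offer never rejects on an unbounded LinkedBlockingQueue
        QUEUE.offer(new Message(Map.of(), jsonBody));
    }

    // consumer (execution side): block until a message arrives, then return its body
    static String consume() {
        try {
            return QUEUE.take().body();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for a message", e);
        }
    }

    public static void main(String[] args) throws InterruptedException {
        Thread executionWorker = new Thread(() -> System.out.println("executing: " + consume()));
        executionWorker.start();
        produce("{\"problemId\": 2, \"programmingLanguage\": \"python\"}");
        executionWorker.join();
    }
}
```

the producer never waits for the execution to finish, which is the whole point of putting a queue in between.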

the submissionService was sending messages that looked something like this:

```json
{
  "submissionRequestDto": {
    "userId": 1,
    "problemId": 2,
    "programmingLanguage": "python",
    "code": "print('hello, marouane')",
    "timeLimit": 1000,
    "memoryLimit": 2000
  }
}
```

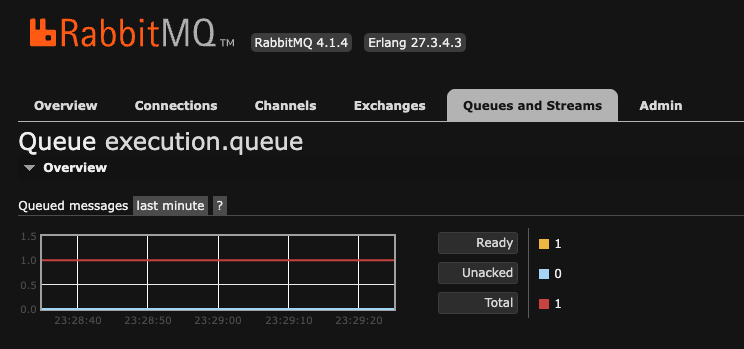

everything seemed perfect. the message was being sent, the queue was receiving it, but when the executionService tried to consume it... boom! deserialization failure.

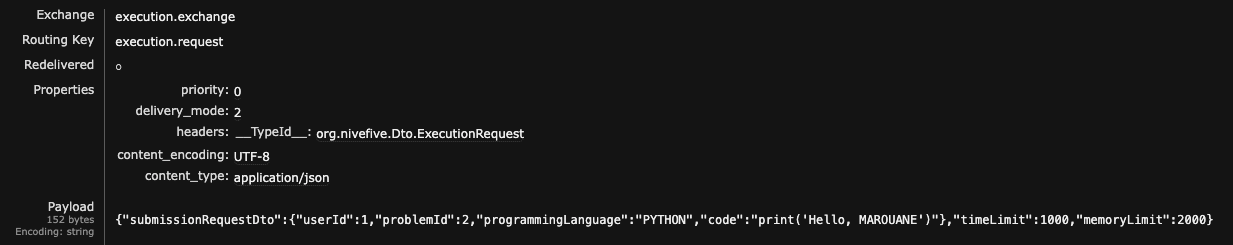

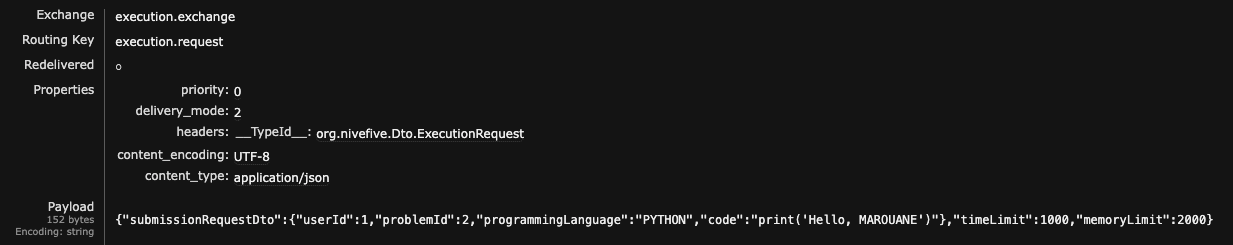

the root cause: headers.

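after some debugging (and a lot of claude prompting, which did not help), i found the real villain: the `__TypeId__` header, one of the message properties. it isn't rabbitmq itself that adds it: spring amqp's json message converter stamps it on outgoing messages, and on the consuming side this little piece of metadata tells jackson¹ which java class to deserialize the json into.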
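to make the mechanism concrete, here's a simplified plain-java model of what a json message converter does with that header, assuming (as in spring amqp's default setup) that the header value is the payload's fully qualified class name. `TypeIdDemo`, `headersFor` and `resolve` are hypothetical names, and the real converter does more than this (trusted-package checks, generic types, and so on).

```java
import java.util.HashMap;
import java.util.Map;

// simplified, hypothetical model of how a type-id header drives deserialization;
// only the header name mirrors spring amqp's default, the rest is illustration
class TypeIdDemo {
    static final String TYPE_ID_HEADER = "__TypeId__";

    // producer side: record the payload's fully qualified class name in the headers
    static Map<String, String> headersFor(Object payload) {
        Map<String, String> headers = new HashMap<>();
        headers.put(TYPE_ID_HEADER, payload.getClass().getName());
        return headers;
    }

    // consumer side: turn the header back into a class on *this* service's classpath
    static Class<?> resolve(Map<String, String> headers) {
        String typeId = headers.get(TYPE_ID_HEADER);
        try {
            return Class.forName(typeId);
        } catch (ClassNotFoundException e) {
            // the point where deserialization gives up in the real system
            throw new IllegalArgumentException("no class " + typeId + " on this classpath", e);
        }
    }

    public static void main(String[] args) {
        // works: java.lang.String exists on both "sides"
        System.out.println(resolve(headersFor("print('hello, marouane')")));

        // fails: this name only exists in the submission service
        Map<String, String> foreign = Map.of(TYPE_ID_HEADER, "org.submissionservice.dto.ExecutionRequest");
        try {
            resolve(foreign);
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

the second call in `main` is my bug in miniature: the header names a class that only exists in the other service.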

this image, though, shows the message after the fix; the format is still the same.


here's where my "perfectly decoupled" architecture bit me:

the producer (submissionService) was setting:

`__TypeId__: org.submissionservice.dto.ExecutionRequest`

but the consumer (executionService) was expecting:

`__TypeId__: org.executionservice.dto.ExecutionRequest`

same dto, different packages. jackson looked at that header, tried to find org.submissionservice.dto.ExecutionRequest inside the executionService, came up empty, basically said "nope!", and spat out a ton of error logs (my screen did not make inspecting those shenanigans any easier).

i had copied the same dto class to both services to maintain loose coupling. different package names, identical fields, and now my "independent" services couldn't talk to each other.

architecture goals: achieved. functionality: not so much.
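java doesn't care that two classes look identical: a class's identity includes its fully qualified name. a small sketch with two hypothetical holder classes standing in for the two services' packages:

```java
import java.lang.reflect.Field;
import java.util.Arrays;

// two hypothetical copies of the same dto, standing in for the two services' packages
class SubmissionServiceDto {
    static class ExecutionRequest {
        long userId;
        long problemId;
        String code;
    }
}

class ExecutionServiceDto {
    static class ExecutionRequest {
        long userId;
        long problemId;
        String code;
    }
}

class DuplicateDtoDemo {
    // sorted field names, since getDeclaredFields() returns them in no particular order
    static String[] fieldNames(Class<?> type) {
        return Arrays.stream(type.getDeclaredFields())
                .map(Field::getName)
                .sorted()
                .toArray(String[]::new);
    }

    public static void main(String[] args) {
        Class<?> producerSide = SubmissionServiceDto.ExecutionRequest.class;
        Class<?> consumerSide = ExecutionServiceDto.ExecutionRequest.class;
        // same shape...
        System.out.println(Arrays.equals(fieldNames(producerSide), fieldNames(consumerSide)));
        // ...but different names, and the name is all a type-id header carries
        System.out.println(producerSide.getName() + " vs " + consumerSide.getName());
        System.out.println(producerSide.equals(consumerSide));
    }
}
```

the field lists come out equal, the class names don't, and the lookup only ever sees the name.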

first attempt

my initial reaction was the classic: "i'll make this work without changing my architecture."

i dove into jackson configuration, trying to make it ignore the `__TypeId__` header, attempting manual json parsing, and generally fighting every framework decision along the way. the code started looking like spaghetti.
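for reference, one of the knobs in that direction (if i read the spring amqp docs right) is an id-to-class mapping on the type mapper, `DefaultJackson2JavaTypeMapper#setIdClassMapping`, which lets a consumer translate a foreign type id into one of its own classes. here's a plain-java model of that idea, with hypothetical names (`LocalExecutionRequest`, `AliasMappingDemo`) and no spring involved:

```java
import java.util.Map;

// hypothetical local copy of the dto inside the execution service
class LocalExecutionRequest {
    long userId;
    String code;
}

class AliasMappingDemo {
    // foreign type id (the producer's class name) -> class on this consumer's classpath;
    // every dto that crosses the queue needs an entry like this, on every consumer
    static final Map<String, Class<?>> ID_CLASS_MAPPING = Map.<String, Class<?>>of(
            "org.submissionservice.dto.ExecutionRequest", LocalExecutionRequest.class);

    static Class<?> resolve(String typeId) {
        Class<?> aliased = ID_CLASS_MAPPING.get(typeId);
        if (aliased != null) {
            return aliased;
        }
        // no alias: fall back to a plain classpath lookup, which fails for foreign names
        try {
            return Class.forName(typeId);
        } catch (ClassNotFoundException e) {
            throw new IllegalArgumentException("unmapped type id: " + typeId, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(resolve("org.submissionservice.dto.ExecutionRequest"));
    }
}
```

it works, but every dto crossing the queue needs an alias on every consumer, which is one more thing to keep in sync by hand.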

after two days of this nonsense, i had to face the truth: i was spending more energy fighting the tools than solving the actual problem.

the realization: pragmatism

that's when i had my moment of clarity (which, as i found out later, is pretty standard stuff!).

what if i created a shared library for common dtos and enums?

now, before you start thinking about tight coupling and microservices violations, let me address the bigggg elephant in the room: yes, this creates coupling between services. yes, it goes against some microservices philosophy (orthodoxy and principles). and yes, i decided to do it anyway.

sometimes the best architecture decision is the one that lets you ship "working" (atm) software instead of maintaining theoretical purity.

the solution: shared library

so i built a shared-models project, which is just a plain java library. no spring boot, no application context, no annotations. just a bunch of pojos.

my monorepo structure now looks something like this:

```
judge-system/
├── api-gateway/
├── docs/
├── execution-worker/
├── infra/
├── problem-service/
├── shared-models/        # our new shared library
├── submission-service/
└── user-service/
```

the shared-models project has a simple maven structure:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0
                             http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.nivefive</groupId>
    <artifactId>shared-models</artifactId>
    <version>1.0-SNAPSHOT</version>
    <packaging>jar</packaging>

    <name>shared-models</name>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    </properties>

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>3.8.1</version>
            <scope>test</scope>
        </dependency>
    </dependencies>
</project>
```

just a basic maven project that contains java classes.

implementation: simple solution

step 1: create the shared dtos

i moved all the common dtos to the shared-models project:

```java
package org.nivefive.shared.dto;

public class ExecutionRequest {

    private long userId;
    private long problemId;
    private String programmingLanguage;
    private String code;
    private Integer timeLimit;
    private Integer memoryLimit;

    // constructors, getters, setters...
}
```
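for completeness, here's one way the elided `// constructors, getters, setters...` part could look. jackson's default bean deserialization needs a no-arg constructor (unless you annotate a creator), and it fills private fields through the setters. the all-args constructor is just a convenience for the producer side, and the package line is left out so the snippet compiles on its own:

```java
// sketch of the filled-in shared dto; in the library it lives in org.nivefive.shared.dto
class ExecutionRequest {

    private long userId;
    private long problemId;
    private String programmingLanguage;
    private String code;
    private Integer timeLimit;
    private Integer memoryLimit;

    // no-arg constructor: jackson's default bean deserialization creates the object with it
    // and then fills the private fields through the setters below
    public ExecutionRequest() {
    }

    // convenience constructor for the producer side
    public ExecutionRequest(long userId, long problemId, String programmingLanguage,
                            String code, Integer timeLimit, Integer memoryLimit) {
        this.userId = userId;
        this.problemId = problemId;
        this.programmingLanguage = programmingLanguage;
        this.code = code;
        this.timeLimit = timeLimit;
        this.memoryLimit = memoryLimit;
    }

    public long getUserId() { return userId; }
    public void setUserId(long userId) { this.userId = userId; }

    public long getProblemId() { return problemId; }
    public void setProblemId(long problemId) { this.problemId = problemId; }

    public String getProgrammingLanguage() { return programmingLanguage; }
    public void setProgrammingLanguage(String programmingLanguage) { this.programmingLanguage = programmingLanguage; }

    public String getCode() { return code; }
    public void setCode(String code) { this.code = code; }

    public Integer getTimeLimit() { return timeLimit; }
    public void setTimeLimit(Integer timeLimit) { this.timeLimit = timeLimit; }

    public Integer getMemoryLimit() { return memoryLimit; }
    public void setMemoryLimit(Integer memoryLimit) { this.memoryLimit = memoryLimit; }
}
```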

step 2: maven

```shell
cd shared-models
mvn clean install
```

this one command builds the jar and installs it into your local maven repository (~/.m2/repository), where the other projects on the same machine can resolve it as a dependency.
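if you want to check where the jar landed: maven's local repository layout turns the groupId into nested directories, followed by the artifactId, the version, and an `artifactId-version.jar` file. a tiny helper that computes that path (`LocalRepoPath` and `jarPath` are my own names; it assumes the default `~/.m2/repository` location):

```java
import java.nio.file.Path;
import java.nio.file.Paths;

class LocalRepoPath {
    // default local repository layout: groupId segments become directories,
    // then artifactId/version/artifactId-version.jar
    static Path jarPath(Path repoRoot, String groupId, String artifactId, String version) {
        Path dir = repoRoot;
        for (String segment : groupId.split("\\.")) {
            dir = dir.resolve(segment);
        }
        return dir.resolve(artifactId).resolve(version).resolve(artifactId + "-" + version + ".jar");
    }

    public static void main(String[] args) {
        // assumes the default location, i.e. no custom <localRepository> in settings.xml
        Path repoRoot = Paths.get(System.getProperty("user.home"), ".m2", "repository");
        System.out.println(jarPath(repoRoot, "org.nivefive", "shared-models", "1.0-SNAPSHOT"));
    }
}
```

for this project that's `~/.m2/repository/org/nivefive/shared-models/1.0-SNAPSHOT/shared-models-1.0-SNAPSHOT.jar`.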

step 3: add dependency to services

in both submissionService and executionService, i added this dependency:

```xml
<dependency>
    <groupId>org.nivefive</groupId>
    <artifactId>shared-models</artifactId>
    <version>1.0-SNAPSHOT</version>
</dependency>
```

step 4: update import statements

changed the imports in both services:

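```java
// before (submissionService)
import org.submissionservice.dto.ExecutionRequest;

// before (executionService)
import org.executionservice.dto.ExecutionRequest;

// after (both services)
import org.nivefive.shared.dto.ExecutionRequest;
```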

now this image should make some sense: notice the `__TypeId__` entry under headers.


results: it works

and just like that, everything worked. the rabbitmq messages flowed between services, jackson found the classes it was looking for, and the `__TypeId__` headers made sense again.

i eliminated two categories of problems:

- the deserialization issue

- the nightmare of keeping duplicate dtos in sync

hopefully this does not create other problems.

need to change a dto? one change in shared-models, rebuild, done.

the bottom line

if you're wrestling with similar issues - rabbitmq deserialization failures, dto duplication, or just the general pain of keeping data structures consistent across services, consider the shared library approach.

yes, it violates some microservices principles. no, it's not the "pure" solution. but it works.

¹ deserialization, in my context, means converting a payload (think of it as a json string) into a java object. jackson takes care of this in my case (ofc you can also do it manually). in other languages, other libraries handle this: rustaceans use serde, pythonistas use pydantic (or the built-in json module).